Introduction:

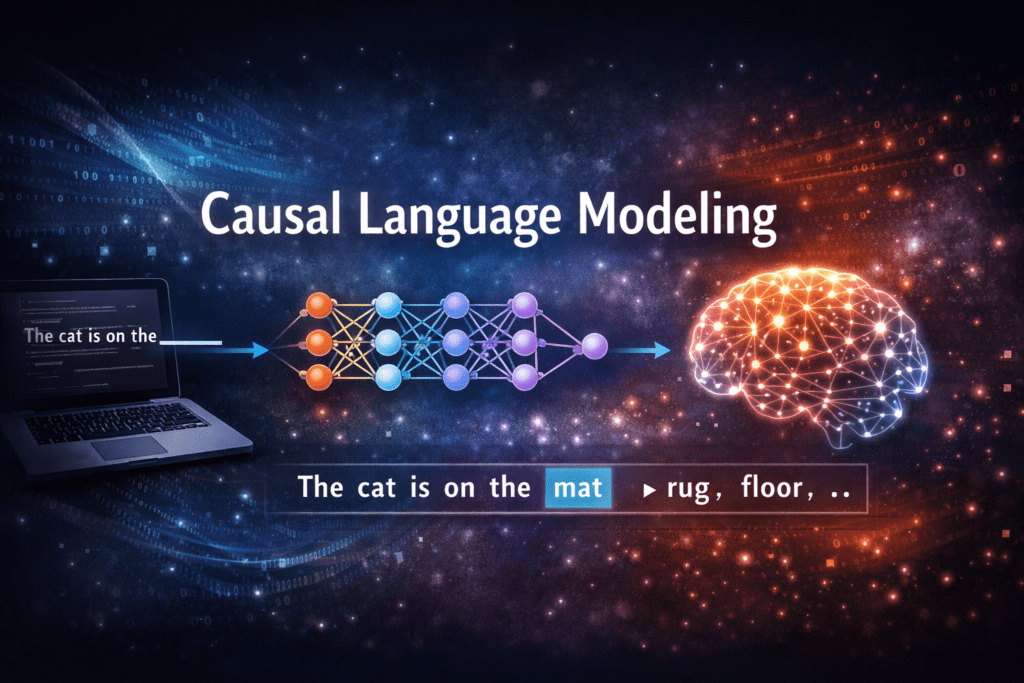

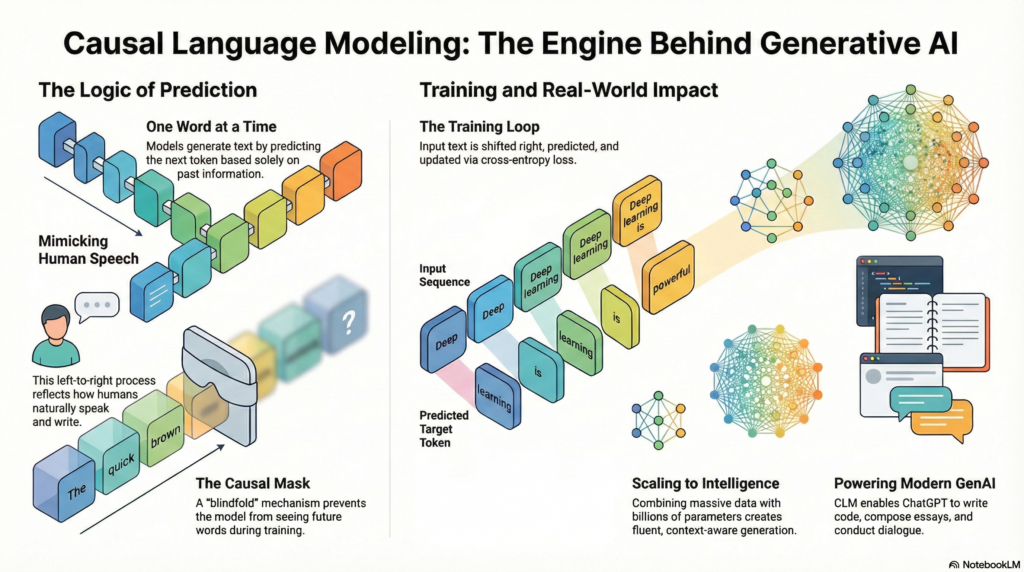

Causal Language Modeling (CLM) is the core training objective behind modern generative AI models such as GPT. It teaches a model to generate coherent, context-aware text one token at a time by predicting the next token based solely on the previous tokens.

What Is Causal Language Modeling?

Causal Language Modeling is a type of language modeling in Natural Language Processing (NLP) where the model predicts the next token (roughly, the next word or word piece) in a sequence using only the previous tokens.

The model reads text from left to right and never looks at future words.

Simple Definition:

Causal Language Modeling is a method where an AI model learns to generate text by predicting the next word based only on past words.

Why Is It Called “Causal”?

The term causal means:

● Future tokens must not influence the prediction of earlier tokens

● Each word depends only on earlier words

This mimics how humans naturally speak and write.

Example:

“I am learning machine _”

The model might predict candidates such as:

● “learning”

● “intelligence”

● “models”

…but it cannot see future words.
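To make the example concrete, here is a minimal sketch of a count-based next-word predictor, assuming a tiny hypothetical corpus. It is not a neural network, but it follows the same causal rule: the distribution over the next word is estimated only from the words that came before it. The corpus and the helper names `build_counts` and `next_word_distribution` are illustrative assumptions.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus (an assumption for illustration, not real training data).
CORPUS = [
    "i am learning machine learning",
    "i am learning machine learning today",
    "i am learning machine intelligence",
    "i am learning machine models",
]


def build_counts(corpus):
    """Map each prefix (tuple of past words) to a Counter of the words that follow it."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for i in range(1, len(words)):
            # Only words[:i] (the past) is used as context for words[i].
            counts[tuple(words[:i])][words[i]] += 1
    return counts


def next_word_distribution(counts, prefix):
    """Return P(next word | prefix) as a dict, estimated from relative counts."""
    followers = counts.get(tuple(prefix.split()), Counter())
    total = sum(followers.values())
    return {word: c / total for word, c in followers.items()} if total else {}


counts = build_counts(CORPUS)
dist = next_word_distribution(counts, "i am learning machine")
for word, p in sorted(dist.items(), key=lambda kv: -kv[1]):
    print(f"{word}: {p:.2f}")
```

With this corpus, the prefix "i am learning machine" gives "learning" a probability of 0.5 and "intelligence" and "models" 0.25 each. The word "today" never appears directly after this prefix, so it is not a candidate.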

Mathematical View (Intuition):

Using the chain rule of probability, the probability of a sentence is factorized as:

$$P(w_1, w_2, \ldots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \ldots, w_{i-1})$$

Each word depends only on previous words, never on future ones.
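The factorization can be checked numerically with a small sketch. The conditional probabilities below are made-up numbers standing in for a trained model's outputs (an assumption for illustration). The function sums log-probabilities instead of multiplying raw probabilities, which avoids numerical underflow on long sequences.

```python
import math

# Hypothetical conditional probabilities P(w_i | w_1, ..., w_{i-1}).
# Keys are (history tuple, next word); a real model would compute these values.
COND_PROBS = {
    ((), "i"): 0.2,
    (("i",), "am"): 0.5,
    (("i", "am"), "learning"): 0.1,
    (("i", "am", "learning"), "machine"): 0.25,
    (("i", "am", "learning", "machine"), "learning"): 0.4,
}


def sentence_log_prob(words, cond_prob):
    """Chain rule in log space: log P(w_1..w_n) = sum_i log P(w_i | w_1..w_{i-1})."""
    total = 0.0
    for i, word in enumerate(words):
        history = tuple(words[:i])  # only past tokens are used as context
        total += math.log(cond_prob[(history, word)])
    return total


words = ["i", "am", "learning", "machine", "learning"]
log_p = sentence_log_prob(words, COND_PROBS)
print(f"log P = {log_p:.4f}, P = {math.exp(log_p):.6f}")
```

Here the product is 0.2 × 0.5 × 0.1 × 0.25 × 0.4 = 0.001, which the log-space sum recovers after exponentiation.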

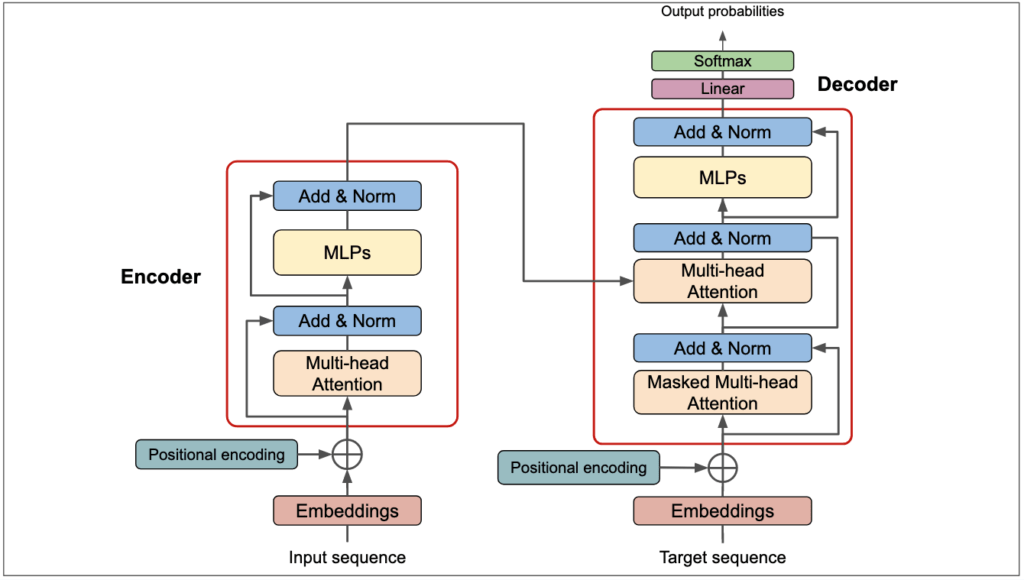

Role of Causal Language Modeling in Transformers:

Causal Language Modeling plays a core role in Transformer-based architectures, especially in GPT-style models.

In these models, the decoder-only Transformer uses causal language modeling to generate text step by step.

A special mechanism called self-attention with causal masking ensures that:

● Each word can attend only to previous words

● Future words are completely hidden

This design allows the model to maintain the natural flow of language generation.
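The step-by-step generation described above can be sketched as a greedy decoding loop. The hypothetical `BIGRAM` table stands in for a trained decoder-only model and, unlike a real Transformer, conditions only on the last token. The point of the sketch is the loop structure: predict from the tokens so far, append the prediction, repeat.

```python
# Hypothetical next-token table standing in for a trained decoder-only model.
# A real Transformer would condition on the whole prefix, not just the last token.
BIGRAM = {
    "<bos>": {"i": 0.9, "we": 0.1},
    "i": {"am": 0.8, "was": 0.2},
    "am": {"learning": 0.7, "here": 0.3},
    "learning": {"machine": 0.6, "<eos>": 0.4},
    "machine": {"models": 0.5, "learning": 0.3, "<eos>": 0.2},
    "models": {"<eos>": 1.0},
}


def next_token_probs(context):
    """Hypothetical model: returns P(next token | context), using only the last token here."""
    return BIGRAM.get(context[-1], {"<eos>": 1.0})


def generate(prompt, max_new_tokens=10):
    """Greedy autoregressive generation: each new token depends only on earlier tokens."""
    tokens = ["<bos>"] + prompt.split()
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)       # condition only on tokens generated so far
        next_tok = max(probs, key=probs.get)   # greedy: pick the most likely token
        if next_tok == "<eos>":
            break
        tokens.append(next_tok)                # feed the prediction back in as context
    return " ".join(tokens[1:])


print(generate("i am"))
```

Greedy decoding always takes the most likely token; real systems often sample instead (for example with a temperature), but the causal loop is the same.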

How Causal Language Modeling Works:

Step-by-Step Process:

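- The input text is tokenized

- Tokens are shifted by one position, so the target at each position is the next token

- The model predicts the next token at every position

- The cross-entropy loss between predictions and targets is calculated

- Parameters are updated using the gradients of this loss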

This process is repeated over large text corpora.
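The training steps above can be sketched in plain Python. The six-word vocabulary, the whitespace tokenizer, and the all-zero logits (a stand-in for an untrained model that guesses uniformly) are assumptions for illustration. The sketch shows how one tokenized sequence yields input/target pairs by shifting, and how cross-entropy scores the predictions.

```python
import math

# Hypothetical vocabulary; real models use subword tokenizers (e.g. BPE) with large vocabularies.
VOCAB = ["<bos>", "i", "am", "learning", "machine", "<eos>"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}


def tokenize(text):
    """Toy whitespace tokenizer that wraps the text in <bos>/<eos> markers."""
    return [TOKEN_TO_ID["<bos>"]] + [TOKEN_TO_ID[w] for w in text.split()] + [TOKEN_TO_ID["<eos>"]]


def make_inputs_and_targets(ids):
    """Inputs are every token but the last; targets are the same sequence shifted by one."""
    return ids[:-1], ids[1:]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy(logits_per_position, targets):
    """Mean negative log-likelihood of each target token under the predicted distribution."""
    losses = [-math.log(softmax(logits)[t]) for logits, t in zip(logits_per_position, targets)]
    return sum(losses) / len(losses)


ids = tokenize("i am learning machine")
inputs, targets = make_inputs_and_targets(ids)
# Hypothetical model output: all-zero logits, i.e. a uniform guess over the vocabulary.
uniform_logits = [[0.0] * len(VOCAB) for _ in inputs]
loss = cross_entropy(uniform_logits, targets)
print(inputs, targets, round(loss, 4))
```

A uniform guess over 6 tokens gives a loss of ln 6 ≈ 1.79 per token. In a real training loop, the gradient of this loss with respect to the logits (softmax(logits) minus the one-hot target) is backpropagated to update the parameters.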

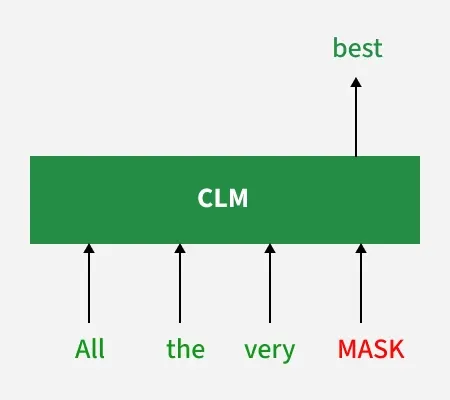

Role of Masking in CLM:

CLM uses causal masking (also called look-ahead masking).

Purpose:

● Prevents attention to future tokens

● Ensures left-to-right generation

In Transformers, this is implemented using a lower-triangular attention mask: the token at position i may attend only to positions 1 through i.
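A minimal sketch of the triangular mask and its effect on attention weights, assuming equal (hypothetical) raw attention scores so the result is easy to read. Masked positions are set to −inf before the softmax, so they receive exactly zero weight. Positions here are 0-indexed.

```python
import math


def causal_mask(n):
    """Lower-triangular mask: mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores, mask):
    """Row-wise softmax that sets disallowed (future) positions to -inf before normalizing."""
    out = []
    for row, allowed in zip(scores, mask):
        masked = [s if ok else float("-inf") for s, ok in zip(row, allowed)]
        m = max(masked)  # finite, because position j = 0 is always allowed
        exps = [math.exp(s - m) for s in masked]  # math.exp(-inf) == 0.0
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


n = 4
scores = [[1.0] * n for _ in range(n)]  # hypothetical equal attention scores
weights = masked_softmax(scores, causal_mask(n))
for row in weights:
    print([round(w, 3) for w in row])
```

Row i spreads its weight evenly over positions 0 through i, and every entry to the right of the diagonal is 0.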

Why CLM Is Important:

✔ Enables text generation

✔ Produces fluent sentences

✔ Scales well with large data

✔ Works naturally for dialogue and storytelling

CLM is the foundation that allows models like ChatGPT to:

● Write essays

● Answer questions

● Generate code

● Continue conversations

Challenges in Causal Language Modeling:

Despite its power, causal language modeling faces challenges:

● Bias in training data can affect outputs

● Hallucinations, where models generate incorrect facts

● High computational cost during training

Researchers continue to improve these models to make them safer and more reliable.

Real-World Impact of Causal Language Modeling:

Causal language modeling has transformed many industries:

Education

● AI tutors

● Automated explanations

● Personalized learning content

Software Development

● Code completion

● Bug explanation

● Documentation generation

Business

● Customer support chatbots

● Email drafting

● Report summarization

CLM in Transformer Architecture:

In GPT-style models:

● Input embeddings are created

● Positional embeddings are added

● Causal self-attention is applied

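● Output logits predict the next token

The causal self-attention block (together with a feed-forward sublayer) is repeated across many layers before the output logits are computed.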
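The stages listed above can be traced in a toy forward pass. This is a heavily simplified sketch under stated assumptions: random weights, a single attention head with identity query/key/value projections, the same attention operation reused in every layer, no feed-forward or normalization sublayers, and output logits computed against the token embeddings (weight tying). It shows the data flow and the causal property, not a real GPT implementation; all function names are hypothetical.

```python
import math
import random


def make_params(vocab_size, max_len, dim, seed=0):
    """Random token and positional embedding tables (hypothetical, untrained)."""
    rng = random.Random(seed)

    def rand_matrix(rows):
        return [[rng.gauss(0.0, 0.5) for _ in range(dim)] for _ in range(rows)]

    return {"tok": rand_matrix(vocab_size), "pos": rand_matrix(max_len)}


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def causal_self_attention(xs):
    """Single-head scaled dot-product attention with identity Q/K/V (simplifying assumption)."""
    dim = len(xs[0])
    out = []
    for i, q in enumerate(xs):
        # Only positions 0..i are scored: this restriction is the causal mask.
        scores = [dot(q, xs[j]) / math.sqrt(dim) for j in range(i + 1)]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * xs[j][d] for j, w in enumerate(weights)) for d in range(dim)])
    return out


def forward(token_ids, params, num_layers=2):
    # Input embeddings plus positional embeddings
    h = [[t + p for t, p in zip(params["tok"][tid], params["pos"][i])]
         for i, tid in enumerate(token_ids)]
    # Causal self-attention, repeated across layers with a residual connection
    for _ in range(num_layers):
        attn = causal_self_attention(h)
        h = [[a + b for a, b in zip(x, y)] for x, y in zip(h, attn)]
    # Output logits: score each position against every token embedding (weight tying)
    return [[dot(x, e) for e in params["tok"]] for x in h]


params = make_params(vocab_size=6, max_len=8, dim=4)
logits = forward([0, 1, 2, 3], params)
print(len(logits), len(logits[0]))  # 4 6: one row of vocabulary logits per position
```

Because each position attends only to positions at or before it, changing the last input token leaves the logits at all earlier positions unchanged, which is exactly the property causal masking is meant to guarantee.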

Applications of Causal Language Modeling:

● Chatbots

● Story generation

● Code generation

● Autocomplete systems

● AI assistants

Strengths of CLM:

● Simple training objective

● Highly scalable

● Strong generative ability

● Works well with massive datasets

Limitations of CLM:

● Cannot use future (right-hand) context when making each prediction

● Less effective for classification tasks

● Can generate biased or incorrect text

CLM and Large Language Models (LLMs):

LLMs like GPT are trained using:

● Causal Language Modeling

● Massive text corpora

● Billions of parameters

In general, more data and more parameters lead to better next-token prediction.

Future of Causal Language Modeling:

The future of causal language modeling includes:

● Better reasoning abilities

● Lower training costs

● Improved factual accuracy

● Multimodal learning (text + images + audio)

As models improve, causal language modeling will remain a foundational technique in AI.

Final Summary:

Causal Language Modeling is a simple idea with powerful results:

- Predict the next word

- Use only past context

- Repeat step by step