Introduction:

When humans learn, we often need only a few examples to understand a new task.

For example:

Show a child two pictures of a zebra, and they can recognize another zebra in the wild.

AI models traditionally need thousands or millions of examples to perform well.

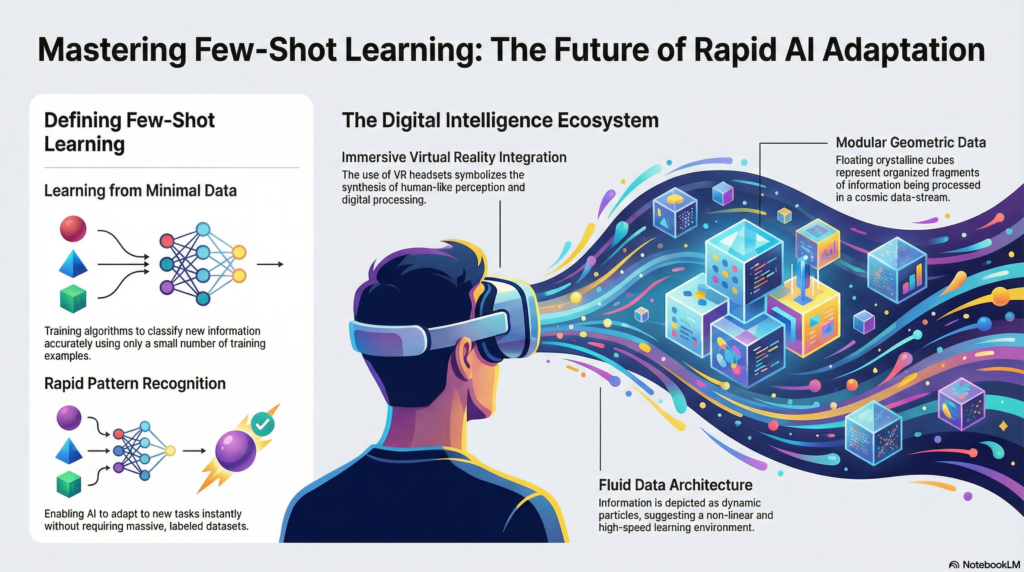

Few-Shot Learning (FSL) is a technique in which an AI model learns a task from very few examples, sometimes just 1, 2, or 5!

This makes AI more flexible, efficient, and closer to human-like learning.

Simple Definition:

Few-Shot Learning is a type of machine learning where a model can perform a task after seeing only a few examples.

Other related terms:

- One-Shot Learning → model learns from 1 example

- Zero-Shot Learning → model can solve a task without any examples, only instructions

How Few-Shot Learning Works

Few-shot learning usually relies on models that already have pretrained knowledge.

Steps:

1. Pretraining:

The model is trained on a large dataset to learn general patterns (such as grammar, logic, or visual features).

2. Providing Examples:

A few task-specific examples (called shots) are given.

3. Task Execution:

The model combines its pretrained knowledge with these few examples to perform the task.
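To make "a few task-specific examples" concrete: few-shot tasks are often packaged as N-way K-shot episodes, meaning N classes, K labeled examples (the shots) per class in a support set, plus a query set the model must label. The Python sketch below only builds such an episode from a toy dataset; the function name `make_episode` and the image file names are hypothetical, and no model is involved.

```python
import random
from typing import Dict, List, Tuple

Example = Tuple[str, str]  # (input, label)

def make_episode(
    data: Dict[str, List[str]], n_way: int, k_shot: int, n_query: int, seed: int = 0
) -> Tuple[List[Example], List[Example]]:
    """Build one hypothetical N-way K-shot episode.

    Picks n_way classes, then k_shot labeled "shots" per class for the
    support set and n_query further examples per class for the query set.
    """
    rng = random.Random(seed)
    classes = rng.sample(sorted(data), n_way)
    support: List[Example] = []
    query: List[Example] = []
    for label in classes:
        # Draw disjoint examples so no query item also appears as a shot.
        examples = rng.sample(data[label], k_shot + n_query)
        support += [(x, label) for x in examples[:k_shot]]
        query += [(x, label) for x in examples[k_shot:]]
    return support, query

# Toy dataset: class name -> placeholder image file names.
animals = {
    "zebra": [f"zebra_{i}.png" for i in range(10)],
    "okapi": [f"okapi_{i}.png" for i in range(10)],
    "tapir": [f"tapir_{i}.png" for i in range(10)],
}

support, query = make_episode(animals, n_way=2, k_shot=3, n_query=2)
print(len(support), len(query))  # → 6 4
```

With `n_way=2, k_shot=3` this is a "2-way 3-shot" task: the model gets 6 labeled examples and must classify the 4 query items.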

Why Few-Shot Learning Matters

Few-Shot Learning is important because it addresses several practical challenges:

1. Limited Data – Many real-world tasks don’t have huge datasets.

For example:

- Rare diseases in medical imaging

- Niche languages for translation

2. Time and Cost Efficiency – Annotating thousands of samples is expensive.

Few-shot learning reduces the need for massive labeling.

3. Rapid Adaptation – AI can quickly adjust to new tasks without retraining from scratch.

Benefits at a Glance

- Reduces data requirements → Less labeled data needed

- Saves time & cost → No need to retrain huge models

- Flexibility → Model can adapt to new tasks quickly

- Human-like learning → Learns from a handful of examples

Few-Shot vs Zero-Shot vs Many-Shot

| Criteria | Zero-Shot Learning | Few-Shot Learning | Many-Shot Learning |
|---|---|---|---|
| Definition | Model performs a task without seeing labeled examples for that specific task | Model learns from a small number of labeled examples (1–5 per class) | Model learns from a large number of labeled examples |
| Training Data | No task-specific labeled data | Very limited labeled data | Large labeled dataset |
| Learning Approach | Relies on pre-trained knowledge and semantic understanding | Uses similarity, embeddings, or meta-learning | Traditional supervised learning |
| Data Requirement | 0 examples per class | K examples per class (K-shot) | Hundreds or thousands per class |
| Model Dependency | Strongly depends on large pre-trained models | Depends on embedding quality and distance metrics | Depends on dataset size and quality |
| Training Time | No additional task training | Very fast adaptation | Longer training time |
| Example | Classifying a new object using only text description | Recognizing a new animal with 3 images | Image classification with 10,000 labeled images |
| Use Case | NLP tasks, prompt-based models | Medical imaging, rare object detection | Large-scale image or speech recognition |
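The zero-shot column's example (classifying an object from a description alone) can be illustrated with a deliberately simplified, attribute-based sketch in Python. Each class is described only by a few hypothetical yes/no attributes, with no labeled images at all, and an input is assigned to the class whose description best matches its attribute scores. The attributes, classes, and `predicted` scores are invented; in a real system the scores would come from a pretrained model, and modern zero-shot systems often compare text and image embeddings instead.

```python
from typing import Dict, List

# Class "descriptions" only, no labeled examples.
# Hypothetical attribute order: [has_stripes, has_hooves, lives_in_water]
CLASS_DESCRIPTIONS: Dict[str, List[float]] = {
    "zebra": [1.0, 1.0, 0.0],
    "horse": [0.0, 1.0, 0.0],
    "dolphin": [0.0, 0.0, 1.0],
}

def zero_shot_classify(attribute_scores: List[float]) -> str:
    """Return the class whose description is closest (squared Euclidean distance)."""
    def distance(description: List[float]) -> float:
        return sum((s - d) ** 2 for s, d in zip(attribute_scores, description))
    return min(CLASS_DESCRIPTIONS, key=lambda name: distance(CLASS_DESCRIPTIONS[name]))

# Assumed output of a pretrained attribute detector for one image:
# strongly striped, probably hooved, not aquatic.
predicted = [0.9, 0.8, 0.1]
print(zero_shot_classify(predicted))  # → zebra
```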

How Models Achieve Few-Shot Learning

Few-shot learning is often implemented with large pretrained models like GPT, BERT, or CLIP.

Techniques include:

1. Prompting:

Give the model an instruction and a few examples in natural language.

2. Meta-Learning:

Train the model to learn how to learn, so it adapts quickly to new tasks.

3. Metric Learning:

For vision tasks, compare new inputs to the labeled examples in a high-dimensional embedding space.

Few-Shot Learning: Intelligence from Minimal Data

Real-World Applications

1. Chatbots:

Answer new types of questions with a few example dialogues.

2. Content Moderation:

Detect new types of harmful content with just a few labeled samples.

3. Medical Diagnosis:

Identify rare diseases with very few patient records.

4. Language Translation:

Translate rare languages using just a few example sentences.

5. Image Classification:

Recognize new products or objects without massive training datasets.

Types of Few-Shot Learning:

1. One-Shot Learning

2. Few-Shot Learning

3. Zero-Shot Learning

Advantages:

- Less labeled data required

- Faster adaptation to new tasks

- Reduces computational cost

- Makes AI more human-like

Limitations:

- Performance depends on pretrained knowledge

- Can struggle with very complex tasks

- Examples must be high quality

- May be sensitive to how examples are presented (for example, their order and wording in a prompt)

Simple Human Analogy

Imagine teaching someone to recognize a rare bird:

- Traditional ML: Show 10,000 pictures

- Few-shot: Show 5 good pictures, and they learn to recognize it

- Zero-shot: Give them a detailed description, and they try to identify it

Humans are naturally few-shot learners, and AI is catching up!

Few-Shot Learning Techniques

1. Prompting (Mostly in NLP)

- Give the model a few input-output examples in the text prompt.

- The model generalizes from these examples.

Example: Sentiment Analysis

Review: “The movie was amazing.” → Positive

Review: “The film was boring.” → Negative

Review: “The storyline is interesting.” → ?

A capable model is expected to predict Positive for the last review.
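A few-shot prompt like the sentiment example is usually assembled programmatically. This minimal Python sketch only builds the prompt text; sending it to a model is left out because that depends on which LLM API you use. The instruction line, the `->` separator, and the helper name `build_few_shot_prompt` are assumptions for illustration, not a required format.

```python
from typing import List, Tuple

def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Format an instruction, labeled examples, and one unlabeled query, one per line."""
    lines = ["Classify the sentiment of each review as Positive or Negative.", ""]
    for review, label in examples:
        lines.append(f'Review: "{review}" -> {label}')
    # The last line is left open so the model's continuation is the label.
    lines.append(f'Review: "{query}" ->')
    return "\n".join(lines)

shots = [
    ("The movie was amazing.", "Positive"),
    ("The film was boring.", "Negative"),
]
prompt = build_few_shot_prompt(shots, "The storyline is interesting.")
print(prompt)
```

Because models can be sensitive to how examples are presented, keeping the format in one function makes it easy to try different orderings or wordings of the same shots.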

2. Meta-Learning (“Learning to Learn”)

- The model is trained to adapt quickly to new tasks with few examples.

- Idea: Learn how to learn instead of learning one task.

Example:

- Train on multiple tasks → model can perform unseen tasks using only a few examples.
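One concrete meta-learning algorithm is Reptile, a first-order method: for each sampled training task it runs a few gradient steps from a shared initialization, then nudges that initialization toward the adapted weights. The pure-Python sketch below is a toy illustration under loudly stated assumptions: the task family (random lines y = a·x + b), the two-parameter linear model, and all hyperparameters are invented for the example. Other meta-learning methods such as MAML work differently.

```python
import random
from typing import List, Tuple

Params = Tuple[float, float]        # (slope w, intercept c) of a 1-D linear model
Data = List[Tuple[float, float]]    # list of (x, y) pairs

def sample_task(rng: random.Random) -> Params:
    """Hypothetical task family: lines y = a*x + b with random slope and intercept."""
    return rng.uniform(1.0, 3.0), rng.uniform(-1.0, 1.0)

def sample_points(task: Params, k: int, rng: random.Random) -> Data:
    """Draw k noise-free (x, y) examples from one task."""
    a, b = task
    return [(x, a * x + b) for x in (rng.uniform(-1.0, 1.0) for _ in range(k))]

def mse(params: Params, data: Data) -> float:
    w, c = params
    return sum((w * x + c - y) ** 2 for x, y in data) / len(data)

def adapt(params: Params, data: Data, lr: float = 0.1, steps: int = 5) -> Params:
    """Inner loop: a few full-batch gradient-descent steps on one task's examples."""
    w, c = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + c - y) * x for x, y in data) / n
        grad_c = sum(2 * (w * x + c - y) for x, y in data) / n
        w, c = w - lr * grad_w, c - lr * grad_c
    return w, c

def reptile(meta_iters: int = 1000, k: int = 5, meta_lr: float = 0.1, seed: int = 0) -> Params:
    """Outer loop (Reptile): move the shared initialization toward each task's adapted weights."""
    rng = random.Random(seed)
    w, c = 0.0, 0.0
    for _ in range(meta_iters):
        task_data = sample_points(sample_task(rng), k, rng)
        adapted_w, adapted_c = adapt((w, c), task_data)
        w += meta_lr * (adapted_w - w)
        c += meta_lr * (adapted_c - c)
    return w, c

init = reptile()
rng = random.Random(1)
new_task = sample_task(rng)                  # a task never seen during meta-training
support = sample_points(new_task, 5, rng)    # only 5 examples of it
print("before adaptation:", mse(init, support))
print("after adaptation: ", mse(adapt(init, support), support))
```

The meta-learned initialization ends up near the "typical" line of the task family, so a handful of gradient steps on 5 examples of an unseen line reduces the error on those examples.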

3. Metric Learning / Embedding-Based

- Represent inputs as vectors in an embedding space.

- Compare new examples to known examples using a similarity or distance measure.

Example in Vision:

- An image of a new animal is converted into a vector.

- The vector is compared with the vectors of known animals, and the closest match determines the class.
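The vision example can be sketched as a nearest-prototype classifier, the idea behind Prototypical Networks: average each class's few support vectors into a prototype, then label a new vector with the nearest prototype. In this Python sketch the 3-D vectors are hand-written stand-ins for the output of a pretrained image encoder (an assumption); a real system would use learned embeddings with hundreds of dimensions.

```python
import math
from typing import Dict, List

Vector = List[float]

def prototype(vectors: List[Vector]) -> Vector:
    """Average the support embeddings of one class into a single prototype vector."""
    return [sum(dims) / len(vectors) for dims in zip(*vectors)]

def classify(query: Vector, support: Dict[str, List[Vector]]) -> str:
    """Label the query with the class whose prototype is nearest (Euclidean distance)."""
    prototypes = {label: prototype(vecs) for label, vecs in support.items()}
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))

# Two "shots" per class, as hypothetical embeddings from a pretrained encoder.
support = {
    "zebra": [[0.9, 0.1, 0.8], [0.8, 0.2, 0.9]],
    "giraffe": [[0.1, 0.9, 0.3], [0.2, 0.8, 0.2]],
}
print(classify([0.85, 0.15, 0.7], support))  # → zebra
```

Adding a new class only requires a few labeled vectors; no weights are retrained, which is why this approach suits few-shot settings.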

4. Fine-Tuning Pretrained Models

- Take a pretrained model (such as GPT) and fine-tune it on a few examples.

- This allows leveraging general knowledge while adapting to a new task.
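A lightweight variant of this, sometimes called a linear probe, keeps the pretrained model frozen and trains only a small classification head on its outputs. The Python sketch below assumes the few labeled examples have already been turned into feature vectors by some pretrained encoder; the 2-D vectors are invented, and the head is a plain logistic regression trained with per-example gradient descent. Full fine-tuning of a model like GPT would instead update the model's own weights.

```python
import math
from typing import List, Tuple

Head = Tuple[List[float], float]  # (weights, bias)

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_head(examples: List[Tuple[List[float], int]], lr: float = 0.5, epochs: int = 200) -> Head:
    """Fit a logistic-regression head on frozen features with per-example gradient descent."""
    dim = len(examples[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        for x, y in examples:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log-loss with respect to the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(head: Head, x: List[float]) -> int:
    w, b = head
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5)

# Four labeled examples (1 = Positive, 0 = Negative), already encoded as
# hypothetical 2-D feature vectors by a frozen pretrained model.
few_shots = [([0.9, 0.1], 1), ([0.8, 0.3], 1), ([0.2, 0.9], 0), ([0.1, 0.7], 0)]
head = train_head(few_shots)
print(predict(head, [0.95, 0.05]), predict(head, [0.05, 0.95]))  # → 1 0
```

Training only a few parameters on top of fixed features is cheap and less prone to overfitting four examples than updating every weight of a large model.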

Conclusion

Few-Shot Learning is changing how AI systems are built by enabling models to learn more like humans: efficiently, quickly, and flexibly.

With pretrained models, clever prompting, and meta-learning techniques, AI can now solve tasks with very few examples, opening doors to applications in healthcare, NLP, vision, and more.