Introduction.

In today’s fast-paced software development world, consistency, speed, and portability have become essential pillars of modern engineering. Docker has emerged as a revolutionary tool that addresses these needs by allowing developers to build, package, and ship applications in lightweight, portable containers. At the core of this ecosystem are two fundamental components: the Dockerfile and Docker Compose. Understanding how they work together and how to apply them in different scenarios is critical for any developer, DevOps engineer, or system architect striving to build reliable, scalable environments.

A Dockerfile is the blueprint for building container images: it defines every instruction the Docker engine follows to assemble an application image. It specifies the base image, copies application files, installs dependencies, and sets up configuration, so that the resulting container behaves the same on any machine that runs Docker. The Dockerfile brings repeatability and automation to the build process, reducing the traditional “works on my machine” problem that has long plagued developers. By writing efficient Dockerfiles, teams can reduce image size, improve security, and speed up deployment across the stages of software delivery.

Docker Compose, on the other hand, orchestrates multi-container environments. Real-world applications rarely operate as a single service; most rely on a combination of backend APIs, databases, caches, and message brokers. Compose lets you define and run these interconnected services from a single YAML configuration file. With one command, you can bring up an entire stack, connect services over shared networks, and inject environment variables. This is particularly useful for local development, integration testing, and staging environments where replicating production conditions is essential.

When used together, Dockerfiles and Docker Compose unlock a powerful workflow that supports every stage of the application lifecycle. Developers can build consistent development environments, testers can spin up reproducible test systems, and operations teams can streamline deployment pipelines. The synergy between these two tools promotes modularity: each service has its own Dockerfile and configuration, while Compose orchestrates how they communicate. This approach aligns well with microservices architectures, continuous integration, and cloud-native development patterns.

In this blog on “Dockerfile & Compose Scenarios,” we will explore real-world use cases and practical setups that demonstrate how these tools can be applied effectively. From creating simple web application containers to managing complex, distributed systems, we’ll walk through scenarios that highlight best practices, optimization techniques, and common pitfalls. You’ll learn how to write secure and efficient Dockerfiles, leverage multi-stage builds, manage environment variables, and organize Compose configurations for clarity and scalability.

We’ll also look at advanced setups such as running reverse proxies, building full-stack environments, using Compose for local CI/CD testing, and migrating Compose configurations to Kubernetes. Whether you’re new to containers or an experienced DevOps professional, understanding Dockerfile and Compose scenarios will empower you to build, deploy, and maintain applications more effectively. The knowledge gained here will help you optimize build performance, ensure cross-platform compatibility, and maintain clean, maintainable configurations that scale gracefully as your systems grow.

Ultimately, mastering these tools means mastering a modern approach to software delivery, one built on automation, reproducibility, and collaboration. As we explore different scenarios, you’ll see how Dockerfile and Compose serve as the foundation for a containerized workflow that bridges the gap between development and production. By the end of this exploration, you’ll not only know how to write better Dockerfiles and Compose files but also how to design your entire development and deployment process around them for maximum efficiency and reliability.

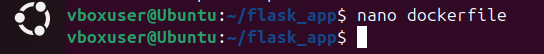

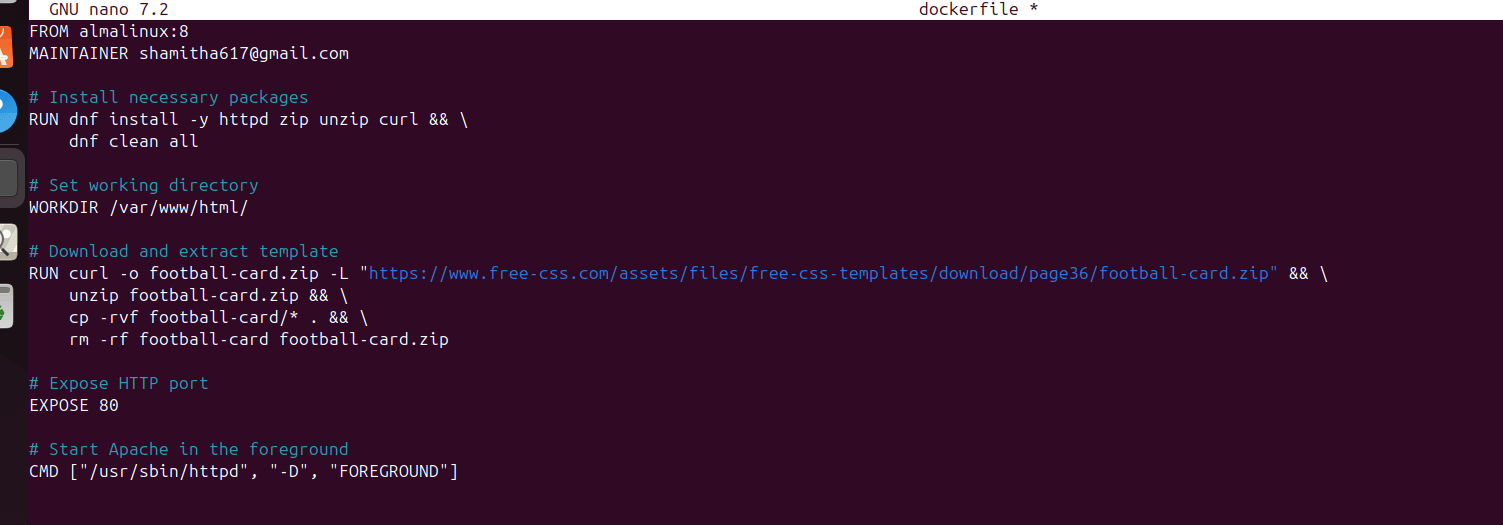

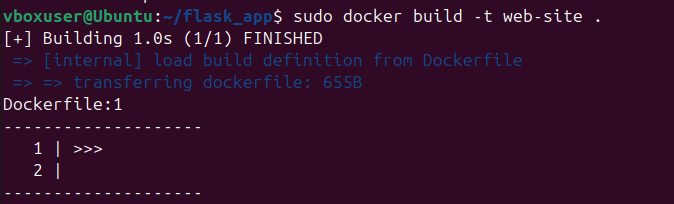

1. Scenario: Build an image using a Dockerfile for a simple HTML app.

```dockerfile
FROM almalinux:8

# MAINTAINER is deprecated; a LABEL carries the same metadata
LABEL maintainer="Entermailid@gmail.com"

# Install necessary packages
RUN dnf install -y httpd zip unzip curl && \
    dnf clean all

# Set working directory
WORKDIR /var/www/html/

# Download and extract template
RUN curl -o football-card.zip -L "https://www.free-css.com/assets/files/free-css-templates/download/page36/football-card.zip" && \
    unzip football-card.zip && \
    cp -rvf football-card/* . && \
    rm -rf football-card football-card.zip

# Expose HTTP port
EXPOSE 80

# Start Apache in the foreground
CMD ["/usr/sbin/httpd", "-D", "FOREGROUND"]
```

➤ Command: docker build -t web-site .

2. Scenario: Use Docker Compose to bring up a multi-container app.

Running an Application Using Docker Compose (Simple Example)

Let’s say you have a Python Flask app that connects to a Redis database. Instead of running multiple docker run commands, you can use docker-compose.yml to define and manage both services together.

Step 1: Project Structure

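```
my-flask-app/
├── app.py              # Flask application
├── requirements.txt
├── Dockerfile          # Required by "build: ." in docker-compose.yml
└── docker-compose.yml
```

The Compose file in Step 2 builds the web service from a Dockerfile in this directory, so one must exist alongside the other files. It needs to install the packages listed in requirements.txt and start the app with python app.py.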

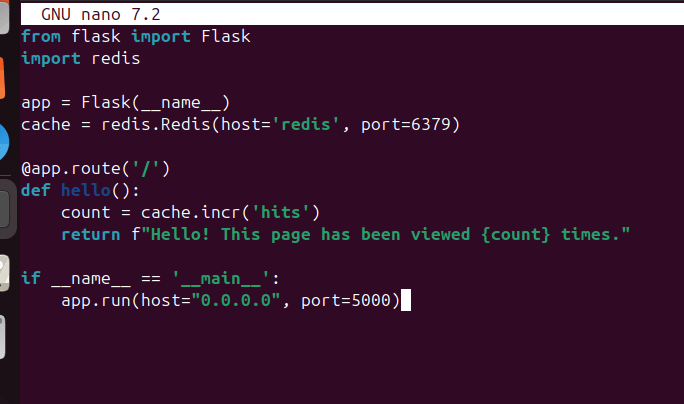

1. app.py (Flask Application)

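```python
from flask import Flask
import redis

app = Flask(__name__)

# "redis" resolves to the Redis service defined in docker-compose.yml
cache = redis.Redis(host='redis', port=6379)


@app.route('/')
def hello():
    # INCR increments the counter atomically and returns the new value
    count = cache.incr('hits')
    return f"Hello! This page has been viewed {count} times."


if __name__ == '__main__':
    # Bind to 0.0.0.0 so the app is reachable from outside the container
    app.run(host="0.0.0.0", port=5000)
```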

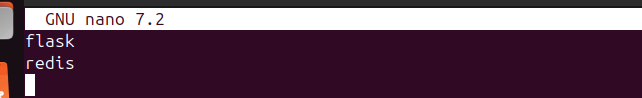

2. requirements.txt

```
flask
redis
```

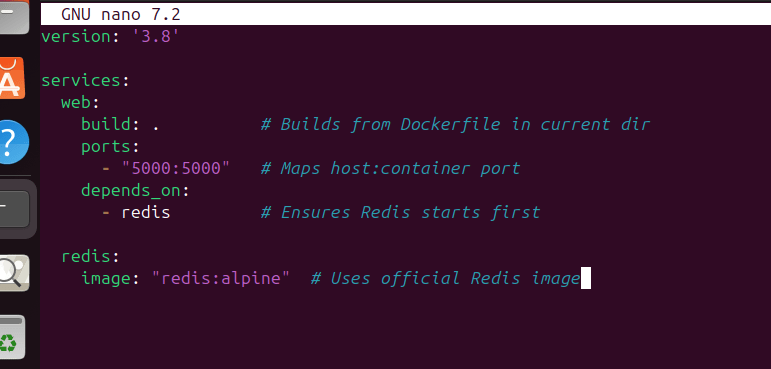

Step 2: Create docker-compose.yml.

```yaml
version: '3.8'               # Optional: recent Compose releases treat this field as obsolete

services:
  web:
    build: .                 # Builds from Dockerfile in current dir
    ports:
      - "5000:5000"          # Maps host:container port
    depends_on:
      - redis                # Starts Redis first (start order only, not readiness)
  redis:
    image: "redis:alpine"    # Uses official Redis image
```
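Because `depends_on` only controls start order, the first request can reach the Flask app before Redis accepts connections. One common workaround is to retry briefly inside the application. The sketch below is a generic helper that uses only the standard library; `retry_call`, its parameters, and the `flaky` stand-in are hypothetical names chosen for illustration and are not part of the original app.

```python
import time


def retry_call(func, exceptions, attempts=5, delay=0.5):
    """Call func(); on one of `exceptions`, wait `delay` seconds and retry.

    Re-raises the last exception once `attempts` calls have failed.
    """
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except exceptions:
            if attempt == attempts:
                raise
            time.sleep(delay)


# Stand-in for a Redis call that fails twice before the server is ready.
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("redis not ready yet")
    return calls["n"]


result = retry_call(flaky, (ConnectionError,), attempts=5, delay=0.01)
print(result)  # 3: two failures, then success on the third call
```

In `app.py`, the equivalent call would probably look like `retry_call(lambda: cache.incr('hits'), (redis.exceptions.ConnectionError,))`, which keeps the route handler unchanged apart from that one line.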

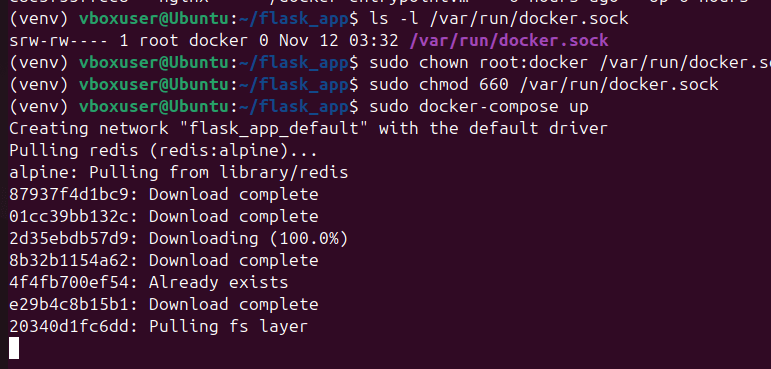

Step 3: Run the Application.

1. Build and start services (Flask + Redis):

- docker-compose up

- Runs both services in the foreground (logs visible).

- Use `-d` to run in detached mode: `docker-compose up -d`

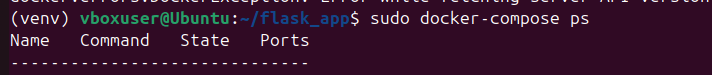

2. Check running services:

- docker-compose ps

| Name | Command | State | Ports |
| --- | --- | --- | --- |
| my-flask-app-web | python app.py | Up | 0.0.0.0:5000->5000/tcp |
| my-flask-app-redis | docker-entrypoint.sh | Up | 6379/tcp |

3. Access the Flask app:

Open http://localhost:5000 in your browser.

Each refresh increments the Redis counter!

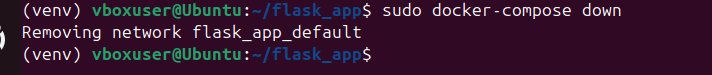

4. Stop the services:

- docker-compose down

- Stops and removes the containers and the network Compose created. Volumes are kept unless you add `-v` (`--volumes`).

Now try running your own multi-container app!

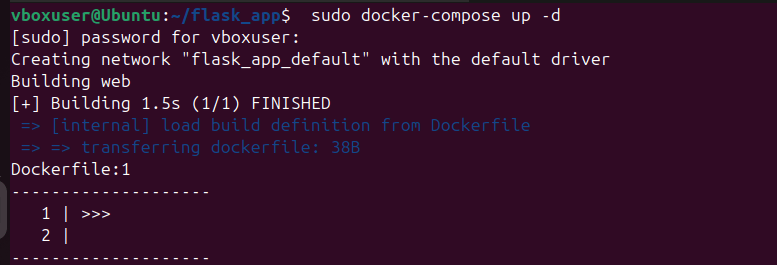

➤ *Command:* `docker-compose up -d`

Security and Resource Control Scenarios.

Scenario: Limit memory usage of a container to 200MB.

➤ Command: docker run -d --memory=200m myapp
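To confirm the limit took effect, one option is to read the cgroup file from inside the container. This is a sketch that assumes a cgroup v2 host, where the limit appears in `/sys/fs/cgroup/memory.max` (cgroup v1 hosts use `/sys/fs/cgroup/memory/memory.limit_in_bytes` instead). The function names are hypothetical.

```python
from pathlib import Path


def parse_memory_limit(text):
    """Return the limit in bytes, or None when the cgroup reports no limit ("max")."""
    value = text.strip()
    return None if value == "max" else int(value)


def read_memory_limit(path="/sys/fs/cgroup/memory.max"):
    """Read the cgroup v2 memory limit; None if the file is missing or unlimited."""
    file = Path(path)
    if not file.exists():
        return None
    return parse_memory_limit(file.read_text())


# Docker's "m" suffix is binary: --memory=200m means 200 * 1024 * 1024 bytes.
expected = 200 * 1024 * 1024
print(expected)                                        # 209715200
print(parse_memory_limit("209715200\n") == expected)   # True
```

Run inside the container (for example through `docker exec`), `read_memory_limit()` should return 209715200 for the command above on a cgroup v2 host.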

Scenario: Limit CPU usage of a container.

➤ Command: docker run -d --cpus=1 myapp

Troubleshooting & Maintenance Scenarios.

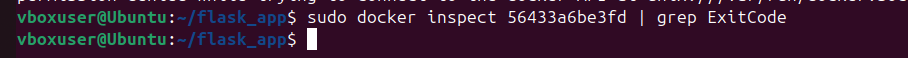

Scenario: Container keeps crashing. Investigate exit code.

➤ Command: docker inspect <container_id> | grep ExitCode
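`grep` prints the matching line but leaves the interpretation to you. As a rough sketch, the JSON that `docker inspect` emits can be parsed directly, since Docker records the code in the `State.ExitCode` field. The helper names and the sample JSON are made up for illustration, and the hints follow the usual shell convention that 128 + N means the process was killed by signal N.

```python
import json
import signal


def exit_code_from_inspect(inspect_json):
    """Return State.ExitCode from the JSON array printed by `docker inspect`."""
    return json.loads(inspect_json)[0]["State"]["ExitCode"]


def describe_exit_code(code):
    """Give a rough hint for an exit code (conventions, not guarantees)."""
    if code == 0:
        return "exited normally"
    if code == 126:
        return "command found but not executable"
    if code == 127:
        return "command not found"
    if code > 128:
        try:
            name = signal.Signals(code - 128).name
        except ValueError:
            name = f"signal {code - 128}"
        return f"killed by {name}"
    return "application error"


# Hypothetical, trimmed-down sample of `docker inspect <container_id>` output.
sample = '[{"Id": "abc123", "State": {"Status": "exited", "ExitCode": 137}}]'
code = exit_code_from_inspect(sample)
print(code, describe_exit_code(code))
```

An exit code of 137 (128 + 9, SIGKILL) often points to the kernel's out-of-memory killer. The same `docker inspect` output includes a `State.OOMKilled` flag that can confirm this.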

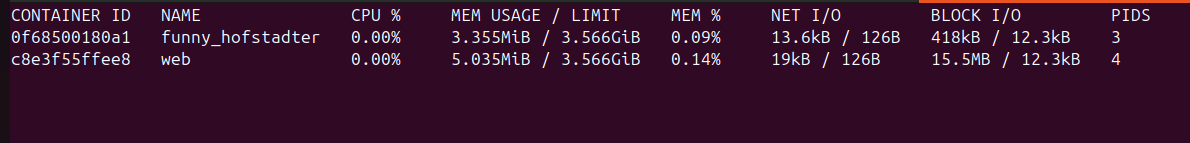

Scenario: Monitor container resource usage.

➤ Command: docker stats

Scenario: Find IP address of a container.

➤ Command: docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' web

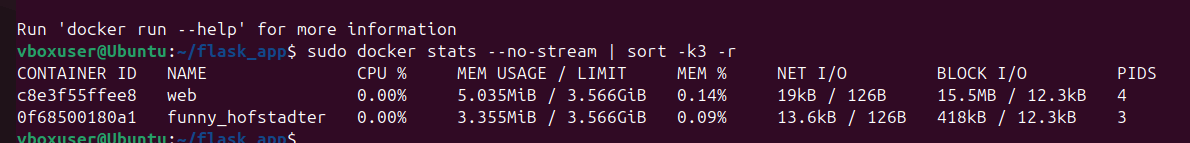

Scenario: Identify containers with high CPU.

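➤ Command: docker stats --no-stream | sort -k3 -rn

The -n flag makes sort compare the CPU % column numerically, so 10% ranks above 9%. The header row ends up at the bottom of the output.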
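When the ranking feeds a script instead of a terminal, it may be easier to parse the percentages explicitly. The sketch below assumes the output of `docker stats --no-stream --format '{{.Name}}\t{{.CPUPerc}}'` (name, tab, CPU percentage). The function name and sample values are illustrative only.

```python
def rank_by_cpu(stats_output):
    """Parse 'name<TAB>12.34%' lines and return (name, cpu) pairs, highest first."""
    rows = []
    for line in stats_output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, cpu = line.split("\t")
        rows.append((name, float(cpu.strip().rstrip("%"))))
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Made-up sample in the assumed format.
sample = "web\t9.50%\nredis\t0.25%\nworker\t10.10%\n"
ranked = rank_by_cpu(sample)
for name, cpu in ranked:
    print(f"{name:10s} {cpu:6.2f}%")
```

With the sample above, `worker` comes first, followed by `web` and then `redis`.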

Scenario: Copy logs or config from container to host.

➤ Command: docker cp <container_id>:/app/logs ./logs

Conclusion.

Creating your first Dockerfile marks the beginning of a journey into the world of containerization. By breaking down your application into simple, reproducible layers, Docker allows you to package your code and its environment into a portable container that can run consistently anywhere. In this guide, you learned how to write a basic Dockerfile, understand its key instructions, build an image, and run a container.

The real power of Docker comes from the efficiency, scalability, and consistency it brings to development and deployment workflows. While this introduction covers the essentials, Docker offers a vast ecosystem of tools, from Docker Compose for multi-container applications to Docker Hub for sharing images, that can help you manage complex applications with ease.

Starting small, experimenting, and iterating on your Dockerfiles will build your confidence and deepen your understanding. With these foundational skills, you’re now ready to explore more advanced containerization strategies, optimize your images, and leverage Docker to streamline development and deployment.

Containers may seem like a simple technology at first glance, but mastering them opens the door to modern, efficient, and scalable software development. Your first Dockerfile is just the beginning: one container at a time, you’re stepping into the future of application deployment.

- For more information about Docker, you can refer to Jeevi’s page.